Emotion recognition from video involves many nuanced challenges. Models that depend exclusively on either visual or audio signals often miss the intricate interplay between these modalities, leading to misinterpretations of emotional content. A key difficulty is reliably combining visual cues—such as facial expressions or body language—with auditory signals like tone or intonation. Many existing systems also lack the capability to explain their decision-making process, which makes it hard to understand how a specific emotion is detected. Furthermore, these models can sometimes generate reasoning that does not directly reflect the input data, or they might fail to fully utilize important audio details. These issues become even more pronounced when models encounter unfamiliar scenarios, emphasizing the need for a more robust and interpretable approach to multimodal emotion recognition.

Introducing R1-Omni by Alibaba Researchers

In their recent work, Alibaba researchers present R1-Omni, an application of Reinforcement Learning with Verifiable Rewards (RLVR) to an omni-multimodal large language model tailored for emotion recognition. R1-Omni builds on the established HumanOmni framework and applies RLVR to fine-tune the model for handling both video and audio data. The method begins with a cold start phase, in which the model is fine-tuned on a combined dataset drawn from Explainable Multimodal Emotion Reasoning (EMER) and a manually annotated dataset. This initial training gives the model basic reasoning skills before it is refined with RLVR. By integrating a rule-based reward mechanism into the training process, R1-Omni is optimized not only for accurate emotion prediction but also for generating clear and interpretable explanations that describe how visual and auditory information interact.

Technical Insights and Benefits of the Approach

At the core of R1-Omni’s design is the integration of Reinforcement Learning with Verifiable Rewards (RLVR) and Group Relative Policy Optimization (GRPO). RLVR replaces the need for subjective human feedback with a verifiable reward function that assesses the model’s output against objective criteria. The reward system is straightforward: if the model’s emotion prediction matches the ground truth, it receives a reward of 1; otherwise, it receives 0. Additionally, a format reward ensures that the output adheres to a specified structure, where the reasoning process is clearly separated from the final prediction by designated tags.
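As a rough illustration of the reward described above, the following Python sketch combines an accuracy reward with a format reward. The tag names `<think>` and `<answer>`, the function names, the case-insensitive label comparison, and the equal-weight sum are all assumptions made for illustration; the article only states that designated tags separate the reasoning from the final prediction.

```python
import re

# Assumed output layout: <think>reasoning</think><answer>label</answer>.
# The tag names are hypothetical; the article only mentions "designated tags".
_OUTPUT_PATTERN = re.compile(
    r"\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*", re.DOTALL
)


def format_reward(output: str) -> float:
    """Return 1.0 if the whole output follows the assumed tag layout, else 0.0."""
    return 1.0 if _OUTPUT_PATTERN.fullmatch(output) else 0.0


def accuracy_reward(output: str, ground_truth: str) -> float:
    """Return 1.0 if the label inside the answer tags matches the ground truth."""
    match = _OUTPUT_PATTERN.fullmatch(output)
    if match is None:
        # Assumption: an unparseable output earns no accuracy reward.
        return 0.0
    predicted = match.group(2).strip().lower()
    return 1.0 if predicted == ground_truth.strip().lower() else 0.0


def total_reward(output: str, ground_truth: str) -> float:
    """Sum the two binary rewards (equal weighting is an assumption)."""
    return accuracy_reward(output, ground_truth) + format_reward(output)


if __name__ == "__main__":
    sample = "<think>Furrowed brow and a raised voice.</think><answer>angry</answer>"
    print(total_reward(sample, "angry"))
```

Because both components are computed by fixed rules, the same output always receives the same score, which is what makes the reward verifiable without human raters.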

GRPO further refines the training process by sampling a group of candidate responses for the same input and scoring each one relative to the rest of the group, so that responses with above-average reward are reinforced and those below average are discouraged. This mechanism helps minimize the occurrence of unsupported or misaligned reasoning while improving the overall quality of the predictions. Together, these technical strategies contribute to enhanced reasoning, a better understanding of multimodal inputs, and improved performance, particularly when the model is tested on data it has not seen before.
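The group comparison at the heart of GRPO can be sketched as a normalization step: each candidate's reward is compared with the mean of its group and scaled by the group's standard deviation. The snippet below is a minimal sketch of that step only, not the full policy update; the function name, the use of the population standard deviation, and the small `eps` constant are assumptions for illustration.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against the other responses in its group.

    A positive value means the response scored above the group average,
    a negative value means it scored below. `eps` (an assumption) avoids
    division by zero when every response receives the same reward.
    """
    if not rewards:
        raise ValueError("rewards must contain at least one value")
    group_mean = mean(rewards)
    group_std = pstdev(rewards)
    return [(r - group_mean) / (group_std + eps) for r in rewards]


if __name__ == "__main__":
    # Hypothetical rewards for four responses sampled for the same video clip.
    print(group_relative_advantages([2.0, 1.0, 0.0, 1.0]))
```

Since the baseline is the group's own average, no separate value model is needed to judge whether a response was better or worse than expected.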

Experimental Results and Key Observations

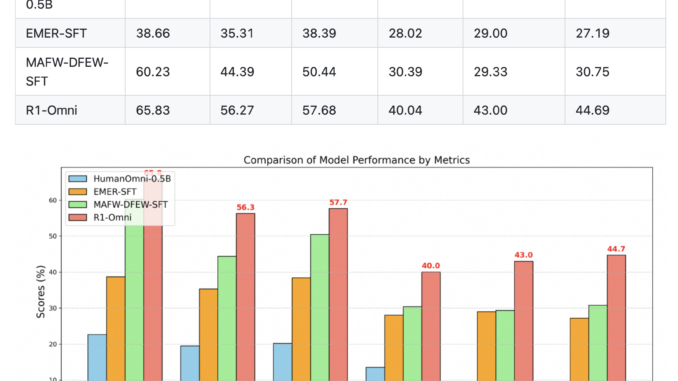

The study presents a comprehensive set of experiments that compare R1-Omni with several baseline models, including the original HumanOmni-0.5B and models trained with supervised fine-tuning (SFT) on the EMER and MAFW-DFEW datasets. On the DFEW dataset, R1-Omni achieves an Unweighted Average Recall (UAR) of 65.83% and a Weighted Average Recall (WAR) of 56.27%. These scores are notably higher than those obtained with other approaches. Similarly, on the MAFW dataset, R1-Omni demonstrates improved performance, highlighting its capability to classify emotions accurately across various classes.
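For readers unfamiliar with the two metrics, the sketch below shows how they are conventionally computed: WAR weights each class's recall by its frequency, which equals overall accuracy, while UAR is the plain mean of per-class recall, so rare emotions count as much as common ones. The function name and the toy labels are hypothetical and are not taken from the study.

```python
from collections import defaultdict
from typing import Hashable, Sequence, Tuple


def war_uar(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> Tuple[float, float]:
    """Return (WAR, UAR) for paired ground-truth and predicted labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and the same length")

    total = defaultdict(int)    # samples per ground-truth class
    correct = defaultdict(int)  # correctly predicted samples per class
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1

    # WAR: recall weighted by class frequency, i.e. overall accuracy.
    war = sum(correct.values()) / len(y_true)
    # UAR: unweighted mean of per-class recall.
    uar = sum(correct[c] / total[c] for c in total) / len(total)
    return war, uar


if __name__ == "__main__":
    # Toy, imbalanced example: 8 "happy" clips and 2 "sad" clips.
    truth = ["happy"] * 8 + ["sad"] * 2
    preds = ["happy"] * 8 + ["happy", "sad"]
    print(war_uar(truth, preds))
```

On imbalanced emotion datasets the two numbers can diverge considerably, which is why studies in this area typically report both.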

An additional strength of R1-Omni is its ability to generate detailed and coherent reasoning processes. Visualization examples provided in the study show that, compared to other models, R1-Omni offers explanations that better reflect how visual and audio cues contribute to the prediction. The model also shows strong generalization capabilities when evaluated on the RAVDESS dataset—a collection featuring professional actors and standardized speech. This suggests that the model is capable of adapting to different types of input data while maintaining a consistent level of performance.

Concluding Thoughts and Future Directions

In summary, R1-Omni represents a thoughtful approach to the challenge of multimodal emotion recognition. By leveraging Reinforcement Learning with Verifiable Rewards, the model is refined not only to predict emotions with greater accuracy but also to articulate the reasoning behind its decisions. This approach helps address some of the long-standing issues in the field, such as the integration of multimodal data and the interpretability of model outputs.

Despite its advances, R1-Omni still faces challenges. For instance, improving subtitle recognition and reducing instances of unsupported reasoning remain areas for further exploration. Future research may focus on enhancing the underlying model, refining the integration of audio cues, and deepening the model’s reasoning capabilities to better mimic the subtlety of human emotional understanding.

Overall, R1-Omni offers a promising framework that balances technical rigor with the need for interpretability, contributing valuable insights into the development of more transparent and effective multimodal emotion recognition systems.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
