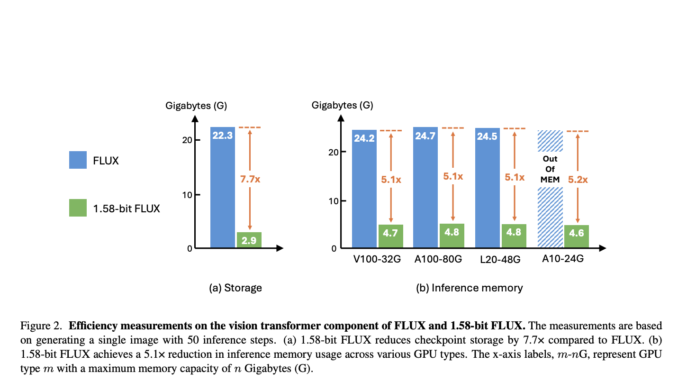

ByteDance Research Introduces 1.58-bit FLUX: A New AI Approach that Gets 99.5% of the Transformer Parameters Quantized to 1.58 bits

Vision Transformers (ViTs) have become a cornerstone in computer vision, offering strong performance and adaptability. However, their large size and computational demands create challenges, particularly for deployment on devices with limited resources. Models like FLUX […]